Label Results Analysis Dashboard

Generated on: 2025-08-07 19:27:47

gemma-3-4b-it Analysis

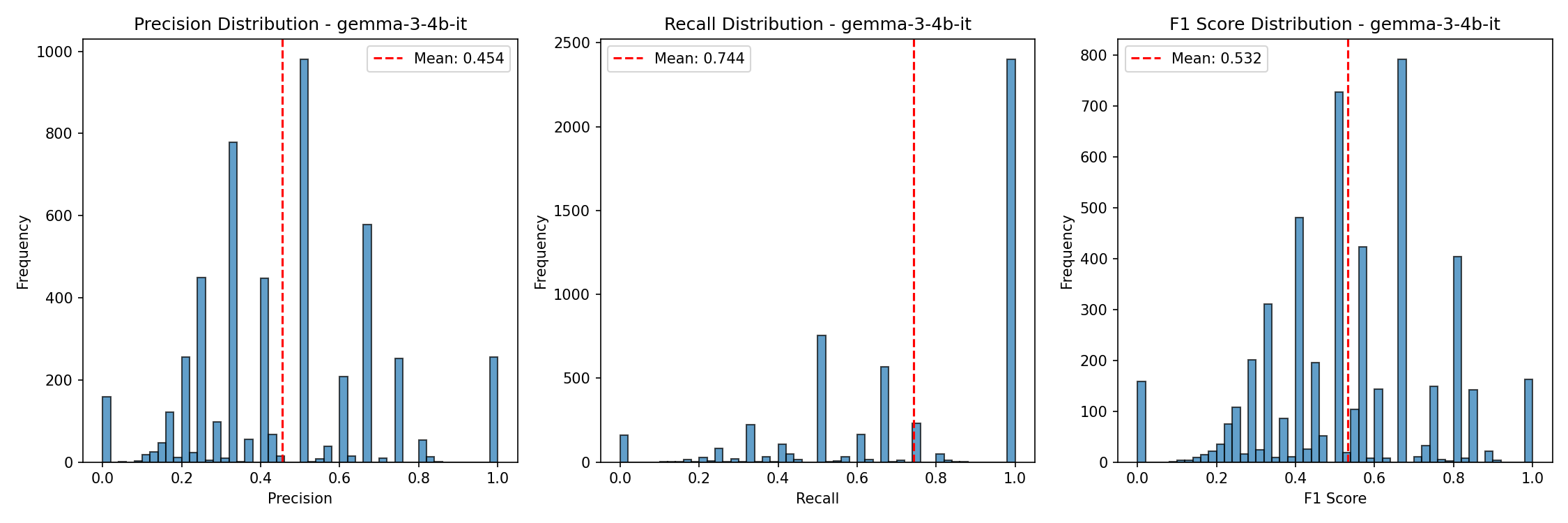

Overall Metrics

| Metric | Value |
|---|---|
| Precision | 0.419 |
| Recall | 0.654 |
| F1 Score | 0.511 |
| Accuracy | 0.032 |
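The dashboard does not say how these four numbers are defined. Below is a minimal sketch under two assumptions: precision, recall and F1 are micro-averaged over all (image, label) pairs, and Accuracy is exact-set match, meaning an image counts as correct only if its whole predicted label set equals its ground-truth set. Under that reading, an accuracy far below F1 is expected: with about 4.7 predicted labels against 2.9 true ones per image, an exact match is rare. The function name `multilabel_metrics` is hypothetical.

```python
from typing import Dict, List, Set


def multilabel_metrics(preds: List[Set[str]], truths: List[Set[str]]) -> Dict[str, float]:
    """Micro-averaged P/R/F1 and exact-match accuracy (assumed definitions)."""
    assert len(preds) == len(truths), "one prediction set per image"
    tp = fp = fn = exact = 0
    for pred, truth in zip(preds, truths):
        tp += len(pred & truth)   # labels predicted and present in ground truth
        fp += len(pred - truth)   # labels predicted but absent from ground truth
        fn += len(truth - pred)   # ground-truth labels the model missed
        exact += int(pred == truth)  # the whole label set must match
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = exact / len(truths) if truths else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}
```

For example, predicting `{"cat", "grass"}` for an image labeled `{"cat"}` still earns a true positive for "cat", which raises precision and recall. The extra "grass" label, however, makes that image fail exact-match accuracy.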

Dataset Statistics

| Statistic | Value |
|---|---|
| Total Samples | 5000 |
| Total Categories | 80 |
| Avg Predictions/Image | 4.7 |
| Avg Ground Truth/Image | 2.9 |
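These averages can be checked against the overall metrics. Under micro-averaging (an assumption, since the dashboard does not state it), true positives per image equal precision × predictions per image and also recall × ground-truth labels per image. The sketch below computes both estimates, together with the implied false positives and misses per image. The function name `per_image_breakdown` is hypothetical.

```python
from typing import Dict


def per_image_breakdown(precision: float, recall: float,
                        avg_pred: float, avg_truth: float) -> Dict[str, float]:
    """Split per-image label counts into TP/FP/FN, assuming micro-averaged P and R."""
    return {
        "tp_from_precision": precision * avg_pred,  # TP = P * predictions
        "tp_from_recall": recall * avg_truth,       # TP = R * ground-truth labels
        "fp": (1 - precision) * avg_pred,           # wrong labels per image
        "fn": (1 - recall) * avg_truth,             # missed labels per image
    }


print(per_image_breakdown(0.419, 0.654, 4.7, 2.9))
```

With the gemma-3-4b-it figures, the two true-positive estimates are about 1.97 and 1.90, so the reported numbers are broadly consistent with micro-averaging. The same figures imply roughly 2.73 incorrect labels and 1.00 missed label per image.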

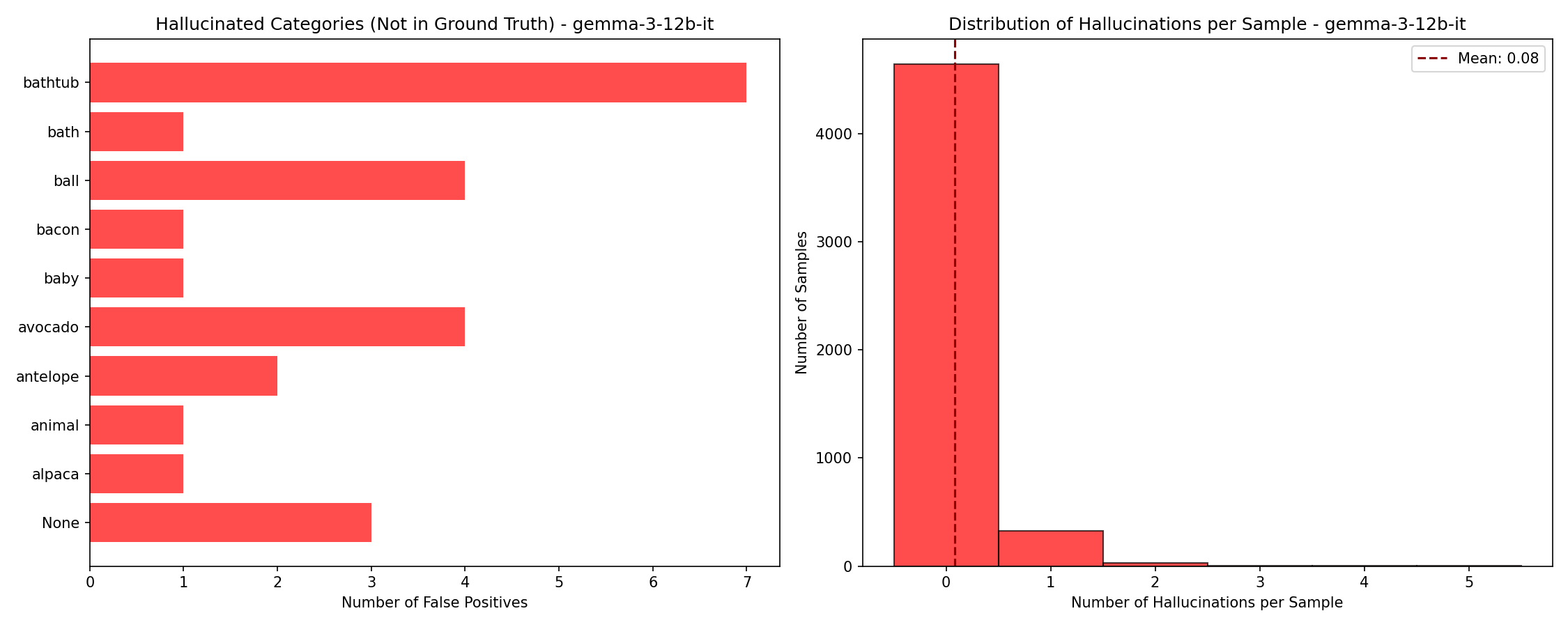

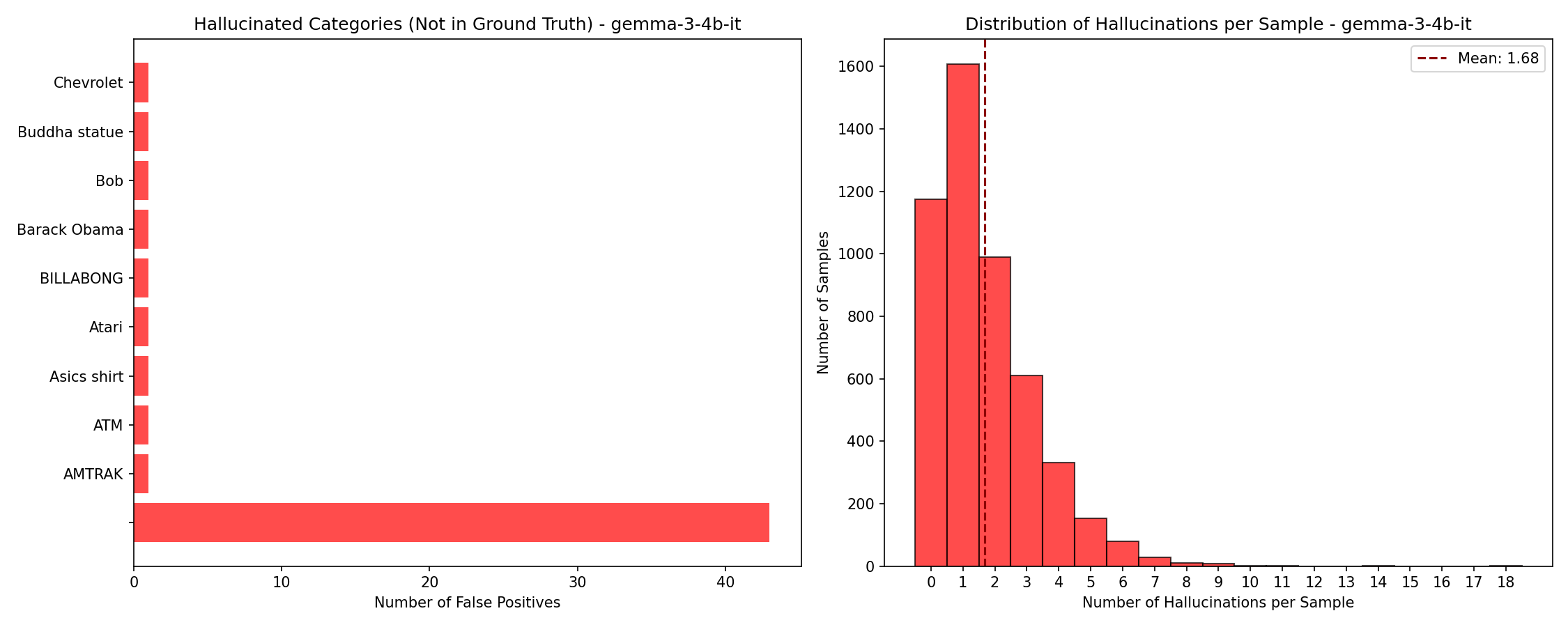

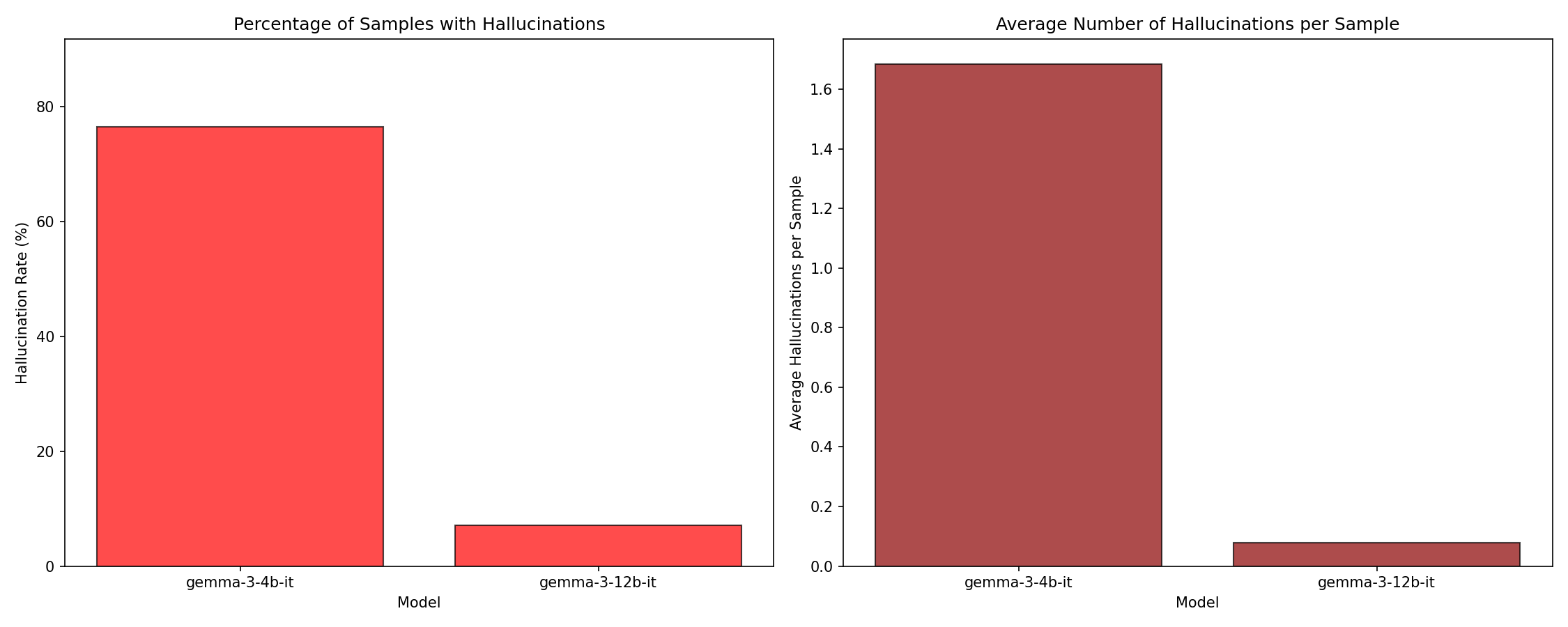

Hallucination Statistics

| Statistic | Value |
|---|---|
| Hallucinated Categories | 1210 |
| Samples with Hallucinations | 3826 (76.5%) |
| Avg Hallucinations/Sample | 1.68 |
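The dashboard does not define "hallucination." The reported 1210 distinct hallucinated categories is far more than the 80 dataset categories, and the hallucination table below lists labels such as "grass" and "table". Both facts suggest that a hallucination here is a predicted label outside the category vocabulary. The sketch below assumes that definition. It also assumes the per-sample average is taken over all samples, not only those with hallucinations. The function name `hallucination_stats` is hypothetical.

```python
from typing import Dict, List, Set


def hallucination_stats(preds: List[Set[str]], vocabulary: Set[str]) -> Dict[str, float]:
    """Count predicted labels outside the vocabulary (assumed meaning of 'hallucination')."""
    distinct: Set[str] = set()
    samples_with = 0
    total = 0
    for pred in preds:
        oov = pred - vocabulary   # labels not among the dataset's categories
        distinct |= oov
        total += len(oov)
        samples_with += int(bool(oov))
    n = len(preds)
    return {
        "hallucinated_categories": len(distinct),
        "samples_with_hallucinations": samples_with,
        "pct_samples": 100.0 * samples_with / n if n else 0.0,
        "avg_per_sample": total / n if n else 0.0,  # averaged over ALL samples (assumption)
    }
```

For the reported figures, 3826 / 5000 = 76.5% of samples. If the average is over all samples, 1.68 per sample corresponds to roughly 8,400 out-of-vocabulary predictions in total.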

Visualizations

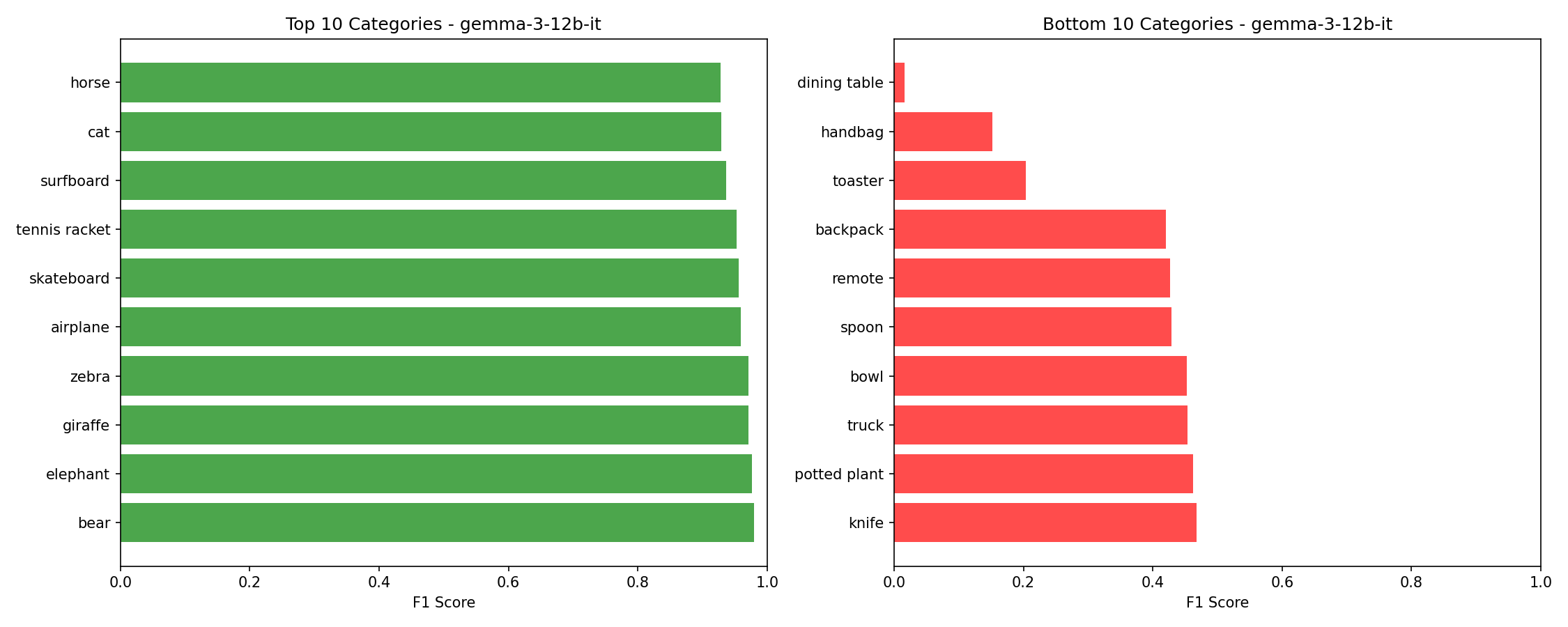

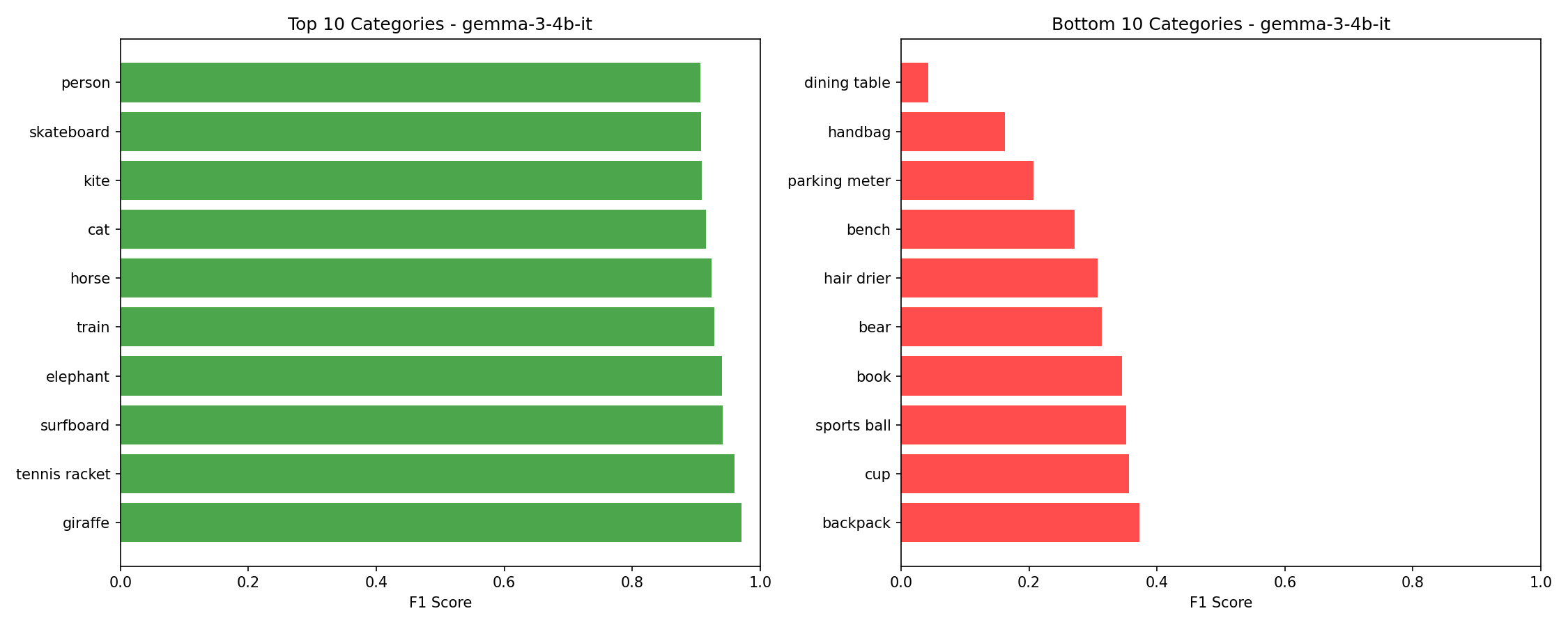

Top Performing Categories

| Category | F1 Score |
|---|---|
| giraffe | 0.971 |
| tennis racket | 0.960 |
| surfboard | 0.942 |
| elephant | 0.940 |
| train | 0.928 |
| horse | 0.924 |
| cat | 0.915 |
| kite | 0.909 |
| skateboard | 0.908 |
| person | 0.906 |

Bottom Performing Categories

| Category | F1 Score |
|---|---|
| backpack | 0.373 |
| cup | 0.356 |
| sports ball | 0.352 |
| book | 0.345 |
| bear | 0.314 |
| hair drier | 0.308 |
| bench | 0.271 |
| parking meter | 0.207 |
| handbag | 0.162 |
| dining table | 0.042 |
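The top and bottom tables rank categories by per-category F1. Here is one way to produce such rankings. It assumes F1 is computed per category from image-level label sets as 2TP / (2TP + FP + FN), which is algebraically the harmonic mean of that category's precision and recall. Restricting scoring to the 80 dataset categories keeps out-of-vocabulary labels out of the ranking, and categories that never occur are skipped so that they cannot fill the bottom of the list. The function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set, Tuple


def per_category_f1(preds: List[Set[str]], truths: List[Set[str]],
                    categories: Iterable[str]) -> Dict[str, float]:
    """Per-category F1 = 2TP / (2TP + FP + FN) over image-level label sets."""
    tp: Counter = Counter()
    fp: Counter = Counter()
    fn: Counter = Counter()
    for pred, truth in zip(preds, truths):
        tp.update(pred & truth)   # correctly predicted categories
        fp.update(pred - truth)   # predicted but not present
        fn.update(truth - pred)   # present but not predicted
    scores = {}
    for c in categories:
        denom = 2 * tp[c] + fp[c] + fn[c]
        if denom:  # skip categories never predicted or annotated
            scores[c] = 2 * tp[c] / denom
    return scores


def top_and_bottom(scores: Dict[str, float], k: int = 10
                   ) -> Tuple[List[Tuple[str, float]], List[Tuple[str, float]]]:
    """Return the k best and k worst categories, each list in descending F1 order."""
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return ranked[:k], ranked[-k:]
```

Both returned lists are sorted from highest to lowest F1, which matches the ordering of the two tables above.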

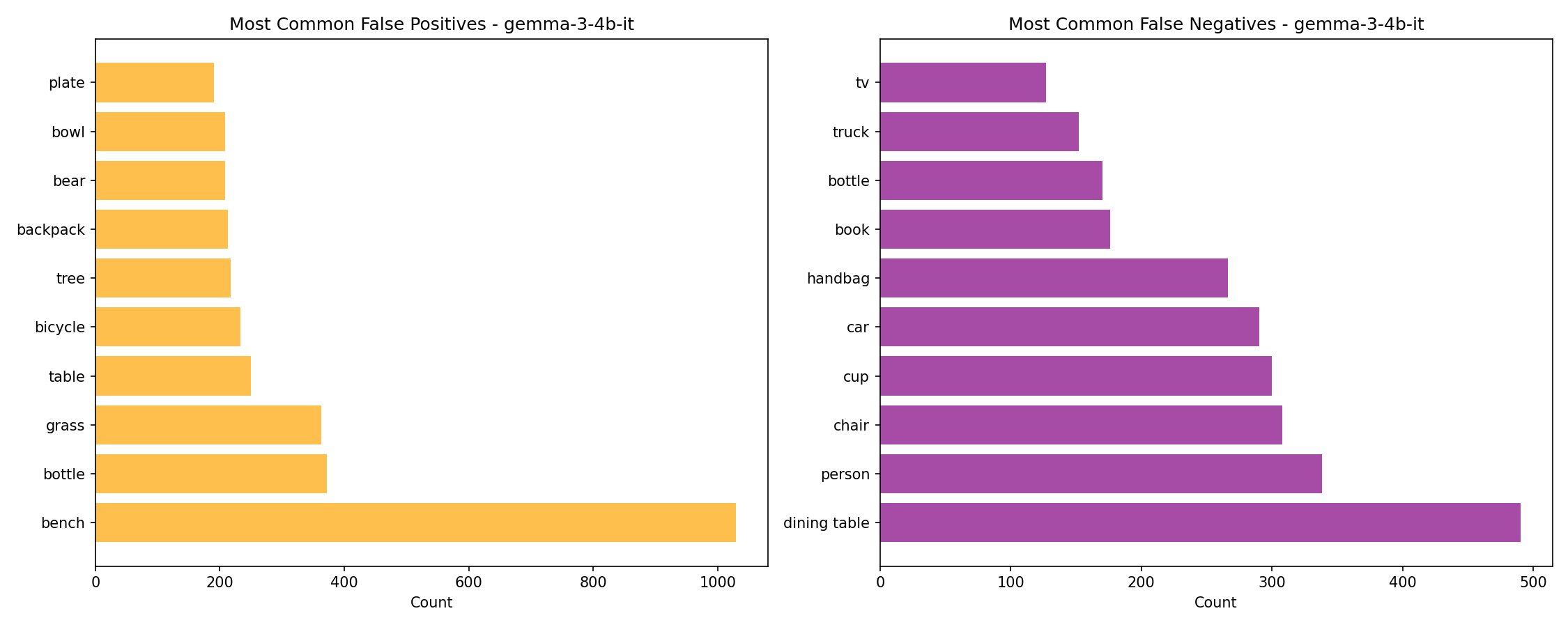

Hallucinated Categories (Not in Ground Truth)

| Category | False Positive Count |
|---|---|
| grass | 363 |
| table | 250 |
| tree | 218 |
| plate | 191 |
| water | 162 |
| building | 161 |
| road | 120 |
| shoes | 119 |
| street | 115 |
| beach | 107 |
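A table like this can be built by counting every predicted label that falls outside the category vocabulary and taking the most frequent ones. The sketch below uses `collections.Counter.most_common`. It treats "not in ground truth" as "not in the category vocabulary", which is an assumption. The function name `top_hallucinations` is hypothetical.

```python
from collections import Counter
from typing import List, Set, Tuple


def top_hallucinations(preds: List[Set[str]], vocabulary: Set[str],
                       k: int = 10) -> List[Tuple[str, int]]:
    """Most frequent out-of-vocabulary labels with their false-positive counts."""
    counts: Counter = Counter()
    for pred in preds:
        counts.update(pred - vocabulary)  # one count per image in which the label appears
    return counts.most_common(k)
```

The counts are keyed on exact strings, so a near-synonym such as "table" is tallied separately from the dataset category "dining table".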

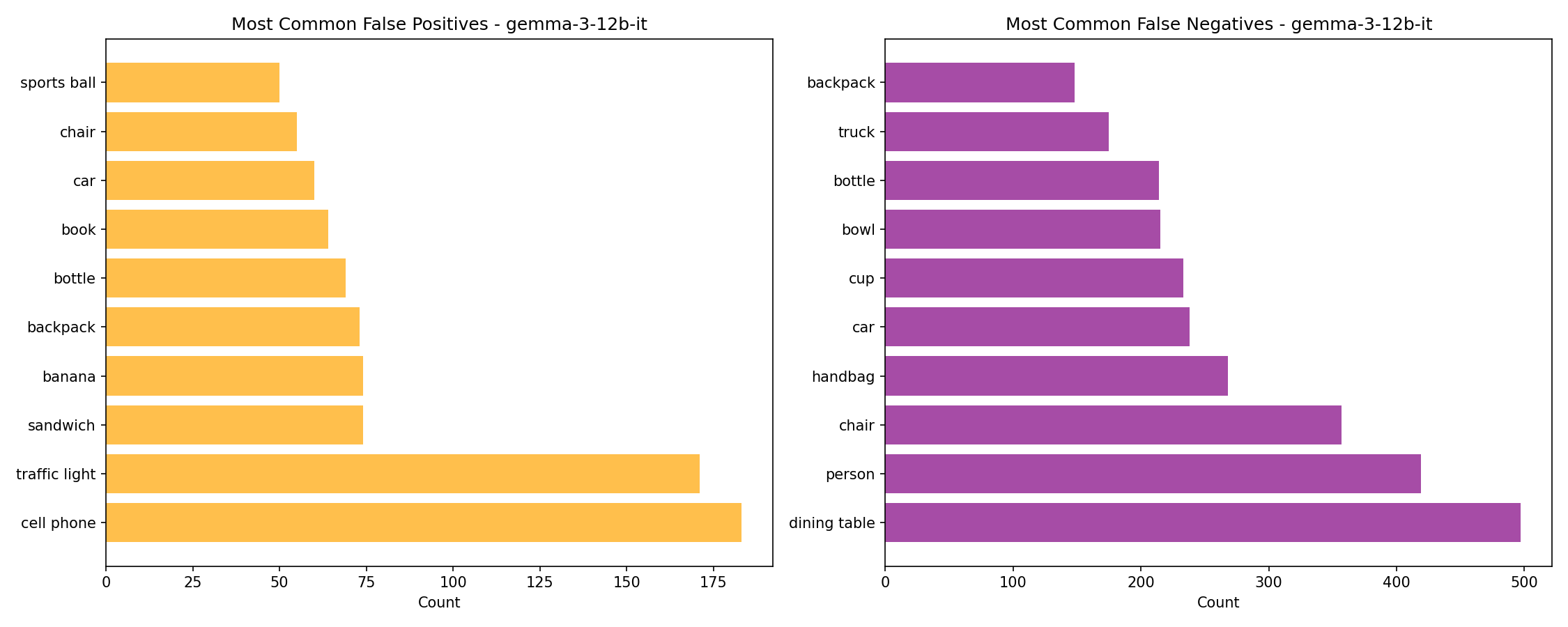

Model Comparison

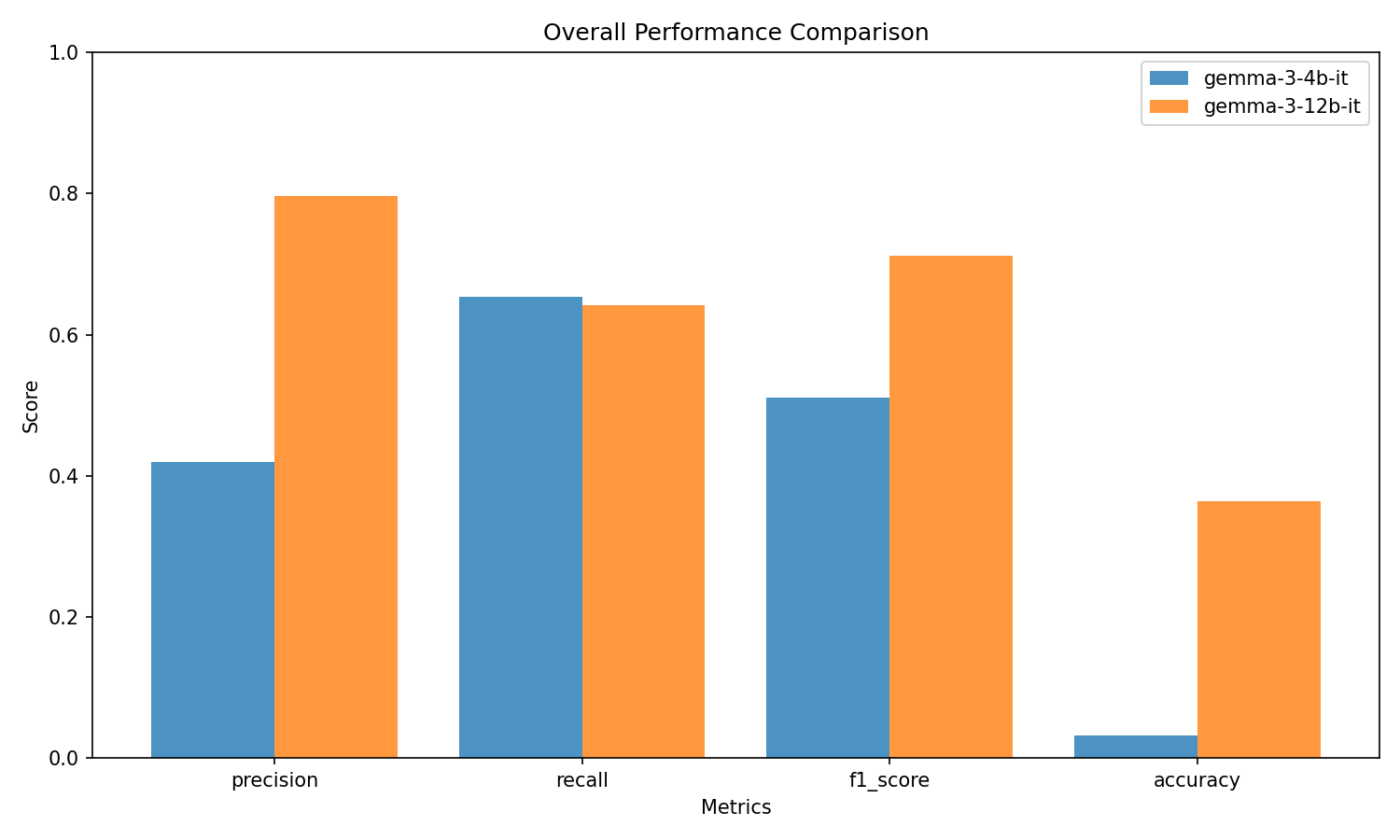

Overall Performance

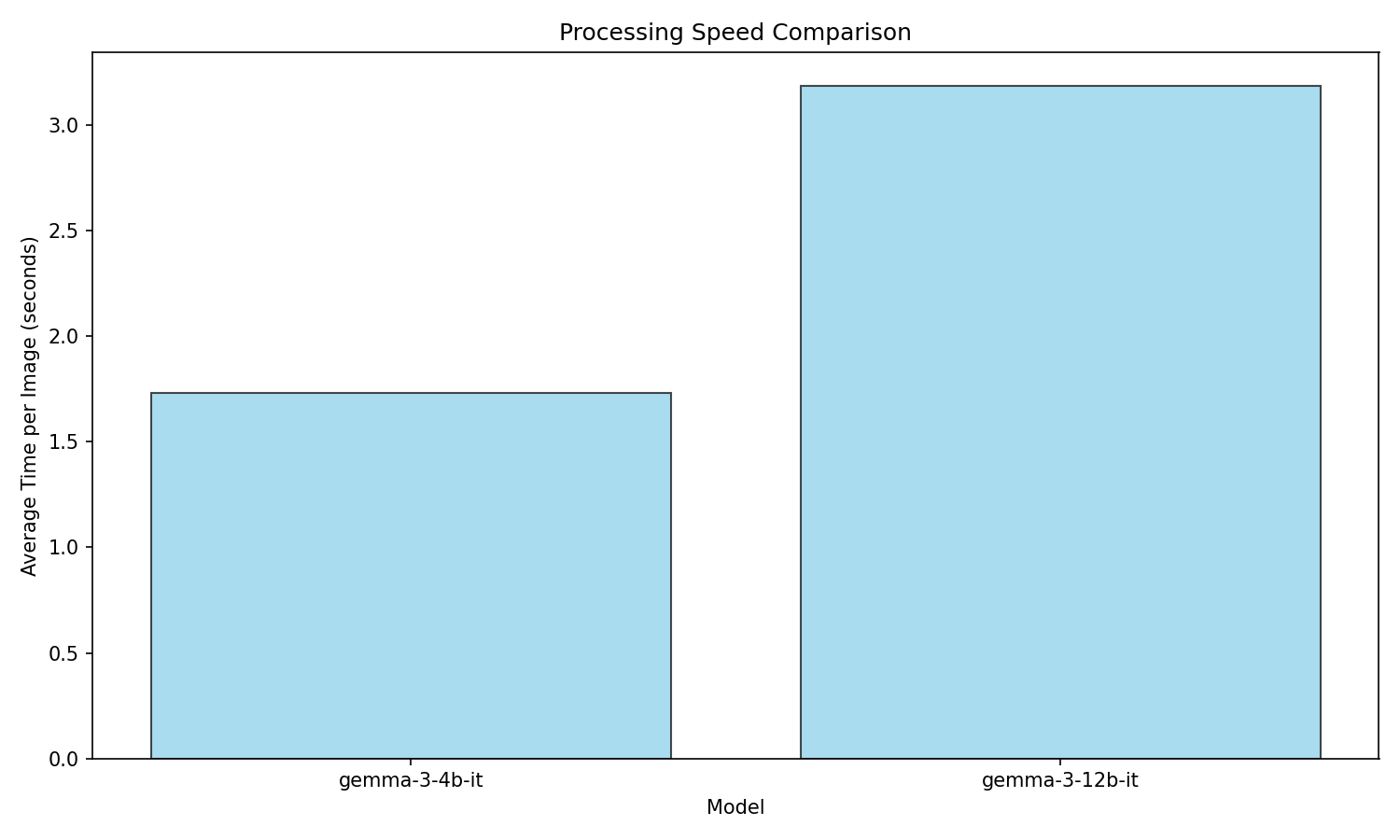

Processing Speed

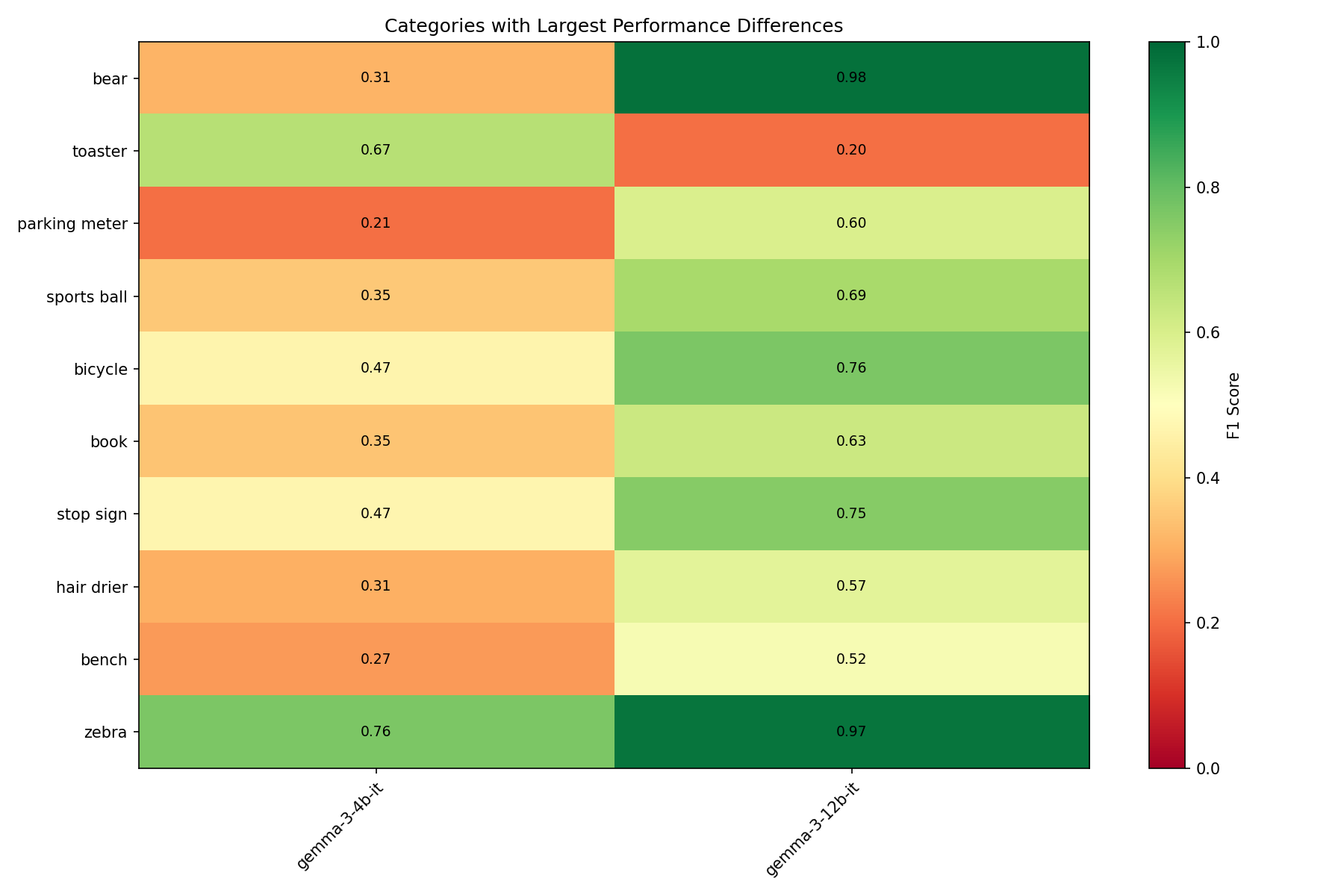

Category Performance Differences

Hallucination Comparison

Detailed Metrics Comparison

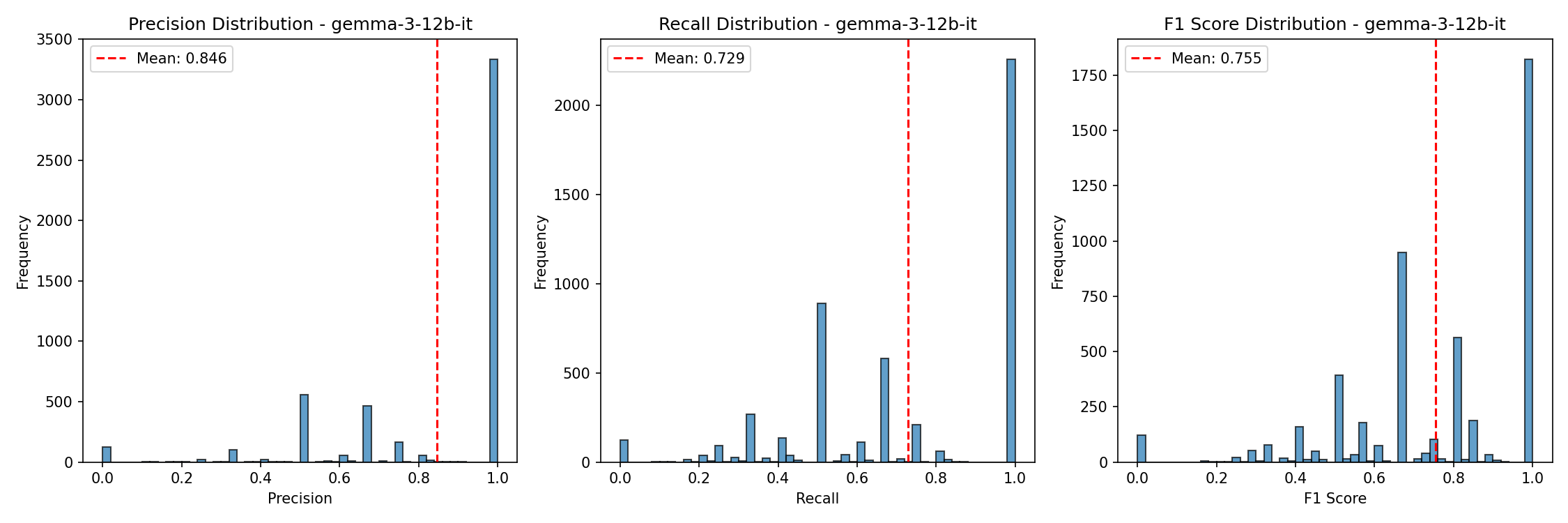

| Model | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|
| gemma-3-4b-it | 0.419 | 0.654 | 0.511 | 0.032 |
| gemma-3-12b-it | 0.797 | 0.642 | 0.711 | 0.365 |
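The F1 column can be checked against the precision and recall columns, because F1 is their harmonic mean, 2PR / (P + R). The snippet below recomputes F1 for both models from the table's own values.

```python
def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


for model, p, r in [("gemma-3-4b-it", 0.419, 0.654), ("gemma-3-12b-it", 0.797, 0.642)]:
    print(f"{model}: F1 = {f1_from_pr(p, r):.3f}")
# gemma-3-4b-it: F1 = 0.511
# gemma-3-12b-it: F1 = 0.711
```

Both recomputed values match the table. The comparison also shows that the 12B model's higher F1 comes from precision (0.419 to 0.797), while its recall is slightly lower (0.654 to 0.642).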